Every network manufacturer has had some CVE for something.

Nope. I don’t talk about myself like that.

Use anything… Mailcow or otherwise. Just don’t expose the ports on your firewall/router to connect back to you.

I have 4 seed boxes I run on PIA. My only issue is that the port changes from time to time. I have to check on them every week or so. It’s also one of the only court-tested VPNs, though it did change hands after that.

Edit: Turns out the PIA client has a bash-accessible command to get the active port. And qBittorrent has a curl-able target to set the value. One bash script and a crontab… and now I don’t ever have to deal with the port changes anymore. You’re welcome, leechers!
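A rough sketch of that kind of script, in Python rather than bash. It assumes `piactl get portforward` prints the forwarded port (or a status word when there isn't one), that the qBittorrent Web UI is on `http://localhost:8080` with authentication bypassed for localhost, and that the Web API's `app/setPreferences` endpoint accepts a `listen_port` value. The URL and the helper names are placeholders, not the exact setup described above.

```python
import json
import subprocess
import urllib.parse
import urllib.request
from typing import Optional

# Assumption: qBittorrent Web UI address; change to match your own setup.
QBT_URL = "http://localhost:8080"


def parse_port(output: str) -> Optional[int]:
    """Return the port if the CLI printed a valid number, else None."""
    text = output.strip()
    if text.isdigit() and 1 <= int(text) <= 65535:
        return int(text)
    return None  # a status word or empty output instead of a port


def build_request(base_url: str, port: int) -> urllib.request.Request:
    """Build the POST that asks qBittorrent to change its listening port."""
    body = urllib.parse.urlencode({"json": json.dumps({"listen_port": port})})
    return urllib.request.Request(
        f"{base_url}/api/v2/app/setPreferences",
        data=body.encode(),
        method="POST",
    )


def sync_port() -> None:
    """Read the forwarded port from the PIA client and push it to qBittorrent."""
    result = subprocess.run(
        ["piactl", "get", "portforward"],
        capture_output=True, text=True, check=True,
    )
    port = parse_port(result.stdout)
    if port is not None:
        urllib.request.urlopen(build_request(QBT_URL, port), timeout=10)

# Call sync_port() from a script that cron runs every few minutes.
```

If the Web UI requires a login, the request would also need a session cookie from the API's login endpoint first; that part is left out here.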

and seeding seems to work for me.

You can only seed to people who have ports open. At least one side of the connection needs to be reachable.

It’s people like me who keep ports available that are able to seed to you.

Well… No offense… but duh? It’s not like OP can migrate his spouse’s “Spouse@gmail.com” address to his mail server.

I was under the assumption (and I could be wrong) that OP owns the domain… And wants to run their mailboxes. If she wants to keep her own mailbox and use it, just forward it to her gmail if that’s what she wants. I’m also not insinuating forcing someone into something.

I own my domain (you guessed correctly) and host my own emails. My spouse does use an inbox on my server (actually a few)… If she didn’t want to anymore she can open a mailbox wherever she wants… and I’ll even forward whatever I get to her. That’s it. Wouldn’t stop me from running my own inbox on my own server. And I’m not forcing her to do anything at all. She can use it or not.

This is the mentality I had when I made the previous comments. Just forward her stuff off, she can go wherever she wants.

Until the basement floods and the server goes offline for a few days

That’s what backups are for.

or botched upgrade that’s failing quietly;

See above

over zealous spam assassin configuration;

That’s an assumption that you’re using this specific product.

What’s funny is you think that none of this can happen to your stuff on Google’s servers for some reason… Say the wrong thing in a YouTube comment? Boom, whole Google profile banned. All your emails are gone too.

Or random software that interfaces poorly with each other (https://support.google.com/mail/thread/142335843/all-of-my-emails-have-disappeared-how-can-i-recover-them?hl=en)

SMTP is stupidly forgiving. You’re not going to magically lose singular emails.

But one thing is for sure, my wife won’t have any of it. She’s a total backwards thinking give me windows or I’ll jump kind of Gal.

So… forward her inbox to her personal gmail account? Keep your mail server as it was for you?

Steam Deck (and other handhelds like that) are super convenient to demo things on. Don’t have to lug entire systems to trade shows. Just load up a dozen Decks in a bag preloaded with whatever the most stable or latest build of your game is.

It makes sense for these trade shows. More of your game gets into people’s hands for that crucial demo. Less time wasted waiting in queue at the 2 PCs you brought instead.

Oftentimes that comes out of department budgets. That’s not necessarily 100% tuition funded.

Edit: meaning printer stuff… my department had our own photocopy machine. It was a department asset.

You’ve forgotten about raid0…

I fed it to my AI and intend to sell access rights to it.

eBay and decommission. I got really lucky on my SSDs, those were all from a decommission. Company was going to pay an ITAD for destruction. I picked it all up and wiped it on site. The rest are relatively cheap hardware, Supermicros and such… but with enough of them you can build a resilient cluster.

A lot of my stuff is eBay… I did recently purchase a new rack as probably the only “new” item I have in regards to my setup. The old one had issues… and I didn’t want to deal with thrifting broken racks anymore. And I needed a taller 45U rack rather than a 42U standard rack… Also, the extra depth means I can accommodate the 60 bay server in the future if it comes to that.

But things like 40Gbps networking… eBay. The Proxmox servers are decommissioned. The TrueNAS server was eBay. Switches were eBay… Oh! The firewalls… That was a new purchase. I am stupid lucky to live somewhere with 8Gbps fiber. I needed real horsepower to push that with IDS/IPS enabled. So this was a new purchase from Supermicro. The SAS spinning rust drives I picked up on Reddit homelabsales or something like that a while back. PDUs were eBay… UPSes were eBay… Expansion batteries were Craigslist. Most cables were new from FS.

Previous versions of my rack were government liquidation/auctions. My dad has a lot of that equipment now. I found one auction that was $1400 that was basically a whole rack’s worth of shit… most of it pretty usable 12th and 13th gen Dells. And another auction for $600 that had a Dell m1000e with some 4TB of DDR4 RAM…

But you can do a lot of this shit with a cluster of little N100 boxes if you really wanted. I just happened to get my hands on enterprise level equipment… So I joined the Romans…

I do not have full proper offsites… yet.

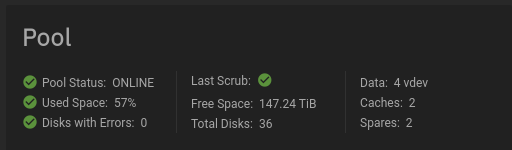

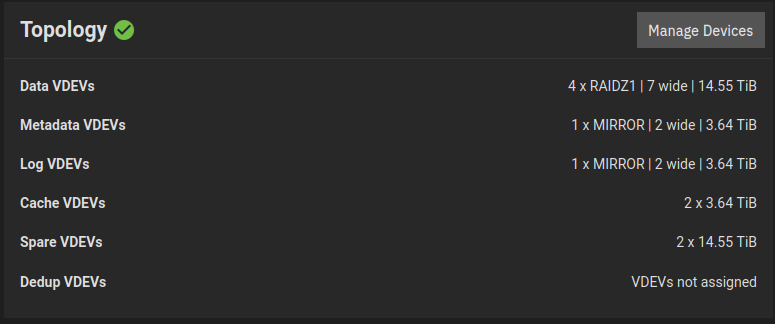

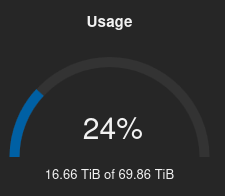

I run Proxmox, so if it’s live on a server it’s probably on my ~70TB (really 40x 2TB SSD) Ceph cluster, which keeps 3 copies across the 5 boxes, so it’s more like 23TB of usable space for all my VMs and such. The 400TB of storage in TrueNAS is really closer to 300TB after all the losses to raidz vdevs, hot spares and what have you: there are 30x 16TB SAS Seagates in the box, of which 2 are hot spares and 7 are parity for raidz1. That’s for things that are slow or linear loads (a movie file is a good example of that type of workload), backups of the Proxmox boxes, and mass-stored stuff, 99% of which I could easily obtain again if I had to. Although I’d probably be pretty flustered about it.
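For anyone who wants to check the math, here is a back-of-the-envelope sketch. It only uses the drive counts quoted above and ignores filesystem overhead, metadata and free-space reserves, so treat the results as rough ceilings. The function names are made up for illustration, and the TB to TiB conversion is my assumption about where part of the gap between marketed and reported capacity comes from.

```python
TB = 10**12   # decimal terabyte, as printed on the drive label
TIB = 2**40   # binary tebibyte, as many tools report capacity


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


def replicated_usable_tb(drives: int, drive_tb: float, copies: int) -> float:
    """Usable space when every object is stored `copies` times (Ceph replication)."""
    return drives * drive_tb / copies


def raidz_usable_tb(drives: int, spares: int, parity: int, drive_tb: float) -> float:
    """Usable space once hot spares and parity drives are subtracted."""
    return (drives - spares - parity) * drive_tb


ceph = replicated_usable_tb(drives=40, drive_tb=2, copies=3)
nas = raidz_usable_tb(drives=30, spares=2, parity=7, drive_tb=16)

print(f"Ceph:    {ceph:.1f} TB usable, about {tb_to_tib(ceph):.1f} TiB")
print(f"TrueNAS: {nas:.0f} TB usable, about {tb_to_tib(nas):.0f} TiB")
```

The TrueNAS side works out to 21 data drives at 16TB each, which is where the "closer to 300TB" figure comes from.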

Truly important stuff gets written to 100GB bluray(s) (specifically m-disc blurays) and put in the safe. I do this probably about once a year or so…

My dad was in the process of setting up his own cluster that’s running 14TB drives rather than my 16TB… When he’s finally done I intend to requisition probably about half of his space for offsite storage (maybe more). I’m figuring about 100TB of space is what I’ll have there. Maybe more. He’s about 65 miles away from me, different electrical grid and all.

So the count as it stands now. Everything running has at least 2 copies on 2 mediums (Ceph cluster, and spinning rust). My “linux iso” repositories only live on the spinning rust storage, but those are low priority anyway. Super important highly sensitive shit lives on at least 3 copies and 3 mediums, although one of the mediums may be out of date and none is offsite… Though it’s rare I add to this category. There are plans for adding another copy of data, offsite on hard drive storage, for most of my dataset as it is now.

TrueNAS usage:

And here’s Ceph

I have to really dislike something to delete it.

The velma tv show was the last item I just deleted.

But for me this is the same story. I’m up to 400TB… I’m just over half full. I’ve got plenty to go, and if I make it to 75-80% full, then I’m going to get me a 45 or 60 bay server and upgrade from my 36 bay one. 6 of the bays are wasted on SSD caching currently… Just finding a chassis that doesn’t waste the 3.5 inch bays on 2.5 inch drives would allow me to add a full vdev (another 100TB…).

Old chassis can be had on eBay relatively cheaply.

Oh god this makes so much more sense…

I had read a comment from OP talking about random shit, then ran into this thread… I thought they just lost their shit. I still wonder… but a lot less now.

If it’s a raidz, you can.

Yes, but at the very least they have to do queries to build that profile out across dozens or hundreds of recipients… And they only get what I explicitly sent to them/their users.

Google collects 100% of the emails you’re getting on gmail and it’s already sent directly to you… so they see it completely… including emails being sent to other sources since it originates from their server (so collecting information that would be going to an MS Exchange server as well…).

Self hosting this means that you’re collecting your own shit… And companies can only get the outgoing side to their users. And never the full picture of your systems/emails.

This matters a lot more than you think. Lots of automation systems send through things like Mailchimp, PHPMailer, etc… So those emails from your doctor likely never originated from MS or Google to begin with. When it hits your inbox on Gmail or Outlook… Well now it’s on their system. Now they can analyze it.

Mailcow.

Personally. No. The hardest part is getting a clean IP and setting up PTR records for a static IP. The rest has been easy for me personally… but I do this shit for a living so I might be biased.

You said you’re using OPNsense for routing… Just keep it up to date and you’ll be fine.

If you’re worried about your AP, I think you can set Omada APs to restart nightly… Though I could be misremembering.
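If you want to sanity-check the PTR side, something like this minimal sketch works. It checks that the IP's reverse lookup returns the mail hostname and that the hostname resolves back to the same IP (forward-confirmed reverse DNS, which many receiving servers look at). The IP and hostname in the usage line are documentation placeholders, the function names are made up, and `gethostbyname_ex` only covers IPv4.

```python
import ipaddress
import socket


def ptr_name(ip: str) -> str:
    """Name of the PTR record that has to exist for this IP."""
    return ipaddress.ip_address(ip).reverse_pointer


def reverse_lookup(ip: str) -> str:
    """Hostname the PTR record points at (uses the system resolver)."""
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> list:
    """IPv4 addresses the hostname resolves to."""
    return socket.gethostbyname_ex(hostname)[2]


def fcrdns_ok(ip, expected_host, reverse=reverse_lookup, forward=forward_lookup):
    """True if IP -> hostname -> IP round-trips and matches the expected host."""
    try:
        name = reverse(ip)
        addresses = forward(name)
    except OSError:  # covers socket.herror and socket.gaierror
        return False
    same_host = name.rstrip(".").lower() == expected_host.rstrip(".").lower()
    return same_host and ip in addresses
```

Usage would be along the lines of `fcrdns_ok("203.0.113.25", "mail.example.com")`, swapping in your own static IP and mail hostname. The PTR record itself usually has to be set by whoever owns the IP block, typically your ISP or hosting provider.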